Downloading large files when network access is scarce

I recently had to download several GB of data from a place where WiFi access is scarce. I made several attempts to download an 8 GB file, and each one failed (connection interruptions, WiFi going down). Then I stopped and asked myself what I could do to get those files onto my client. The download usually failed after around 800 MB, and sometimes after only 600 MB.

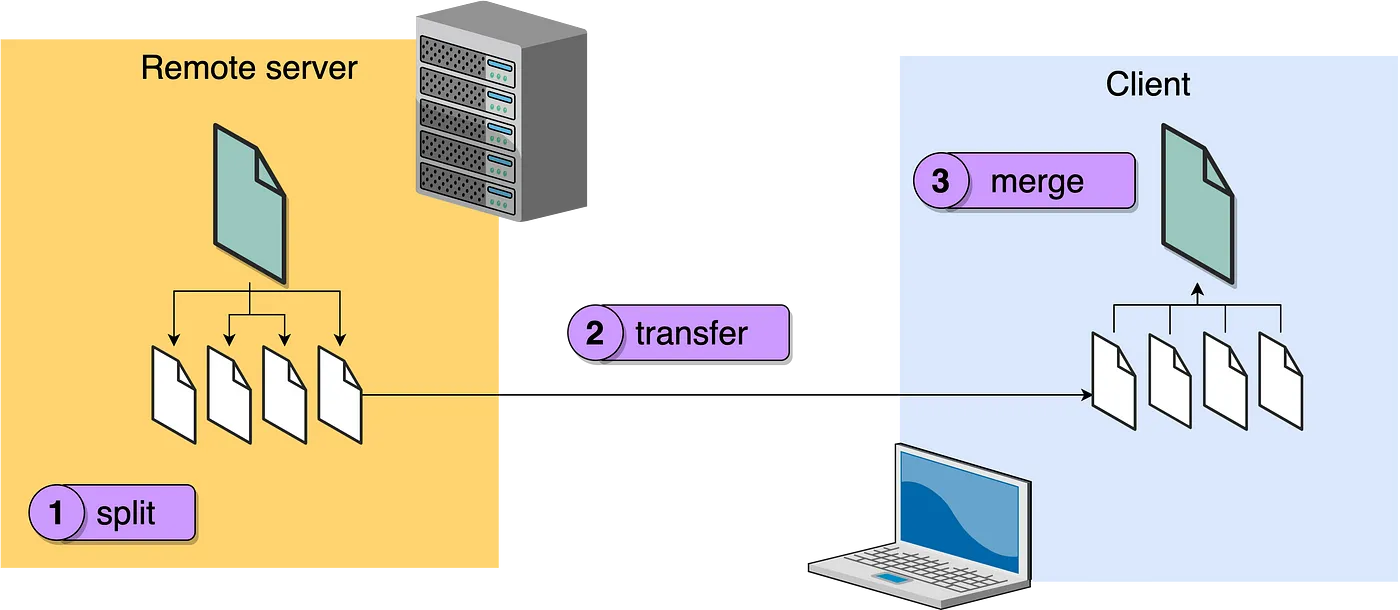

So I decided to split my file into 500 MB chunks. I’d download each of those 500 MB files and then merge them back together on my client. This three-step process is summarized in the diagram below.

1. Split your large file

A UNIX command is particularly useful for this: it’s the split command, duh. To split a .tar of several GB into files of at most 500 MB each, you can use the command below on your server:

```shell
split -b 500m my-large-file.tar "my-large-file.tar.part-"

# $ ls -lh
# 500M my-large-file.tar.part-aa
# 500M my-large-file.tar.part-ab
# 500M my-large-file.tar.part-ac
# 500M my-large-file.tar.part-ad
# …
```
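It can also help to record a checksum for each part on the server. That way, a corrupted chunk can be spotted and re-downloaded on its own. Here is a minimal sketch, assuming GNU coreutils (split, sha256sum). It runs on a small random dummy file split into 1 MiB parts rather than a real .tar split into 500 MB parts. The file name parts.sha256 is made up for the example.

```shell
#!/bin/sh
# Sketch: split a file and record one checksum per part.
# Assumptions: GNU coreutils; a 3 MiB random dummy file stands in for
# my-large-file.tar, and parts.sha256 is a hypothetical file name.
set -eu

# Work in a throwaway directory so nothing real gets overwritten.
workdir=$(mktemp -d)
cd "$workdir"

# Dummy "large" file: exactly 3 MiB of random bytes.
head -c 3145728 /dev/urandom > my-large-file.tar

# Same command as above, with 1m parts instead of 500m so the demo is fast.
split -b 1m my-large-file.tar "my-large-file.tar.part-"

# One checksum per part, plus one for the whole file.
sha256sum my-large-file.tar.part-* > parts.sha256
sha256sum my-large-file.tar > my-large-file.tar.sha256

ls -l my-large-file.tar.part-*
```

Copy parts.sha256 to the client along with the parts. Running sha256sum -c parts.sha256 in the download folder should then flag any part that is missing or doesn’t match, so you only fetch that one again.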

2. Transfer the small files

You can use your favorite transfer protocol for this. Note that tools with a GUI come in handy to notify you when a transfer finishes or fails (scp will often just hang and not tell you there’s a network failure).
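Since a silently hung transfer is the main enemy here, one option is to put a time limit on each part’s transfer and wrap it in a retry loop. Below is a sketch, assuming GNU coreutils’ timeout is installed. The retry helper, MAX_TRIES and the 600-second limit are illustrative choices, not an existing tool. A local cp between two folders stands in for scp so that the sketch runs anywhere.

```shell
#!/bin/sh
# Sketch: copy every part, retrying each one until it succeeds.
# Assumptions: GNU coreutils `timeout` is installed; retry(), MAX_TRIES and
# the 600-second limit are illustrative names and values.
set -u

MAX_TRIES=5

# retry CMD [ARGS...]: run CMD until it exits 0, at most MAX_TRIES times.
retry() {
  attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$MAX_TRIES" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep 1
  done
}

# Local stand-in for the server: three small dummy parts.
workdir=$(mktemp -d)
cd "$workdir"
mkdir server client
for s in aa ab ac; do
  head -c 4096 /dev/urandom > "server/my-large-file.tar.part-$s"
done

for src in server/my-large-file.tar.part-*; do
  # Real transfer (user@server and /path/to are placeholders):
  #   retry timeout 600 scp -o ServerAliveInterval=15 -o ServerAliveCountMax=3 \
  #     "user@server:/path/to/$(basename "$src")" client/
  # timeout kills a stuck copy (exit code 124), which triggers a retry.
  retry timeout 600 cp "$src" client/ || exit 1
done
echo "all parts copied"
```

With scp, the ssh options ServerAliveInterval and ServerAliveCountMax make the client drop a dead connection instead of hanging. With the values above, that happens after roughly 45 seconds without an answer from the server, and the retry loop can then start the part over.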

3. Merge the small files into your original large file

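You can use cat to rebuild your original file. The shell expands the glob in alphabetical order, which matches the aa, ab, ac suffixes that split generates, so the parts are concatenated in the right order: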

```shell
cat my-large-file.tar.part* > my-large-file.tar
```
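Before deleting the parts, it’s worth checking that the rebuilt file is identical to the original. The sketch below is a self-contained round trip, assuming GNU coreutils. It creates a dummy file, splits it into 1 MiB parts, concatenates them back with cat and compares SHA-256 checksums. In real use, the checksum would be computed on the server and copied over along with the parts. The names original.tar and original.sha256 are placeholders.

```shell
#!/bin/sh
# Sketch: rebuild a file from its parts and verify it against the original.
# Assumptions: GNU coreutils (split, sha256sum); a 2.5 MiB random dummy file
# stands in for the real .tar; original.tar/original.sha256 are placeholders.
set -eu

workdir=$(mktemp -d)
cd "$workdir"

# "Server side": dummy file, its checksum, and 1 MiB parts (aa, ab, ac).
head -c 2621440 /dev/urandom > original.tar
sha256sum original.tar | cut -d ' ' -f 1 > original.sha256
split -b 1m original.tar "my-large-file.tar.part-"

# "Client side": concatenate the parts in glob (alphabetical) order.
cat my-large-file.tar.part-* > my-large-file.tar

# Compare the rebuilt file's checksum with the one from the server.
rebuilt=$(sha256sum my-large-file.tar | cut -d ' ' -f 1)
if [ "$rebuilt" = "$(cat original.sha256)" ]; then
  echo "OK: checksums match"
else
  echo "MISMATCH: a part is missing or corrupted" >&2
  exit 1
fi
```

A mismatch usually means a part was truncated by a dropped connection. Checking the per-part checksums, if you recorded them, tells you which one to download again.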

Wrap up

After one network failure, don’t waste your time trying to download a large file over a capricious network. Instead, aim for steady progress by downloading smaller chunks that you merge once you have them all.