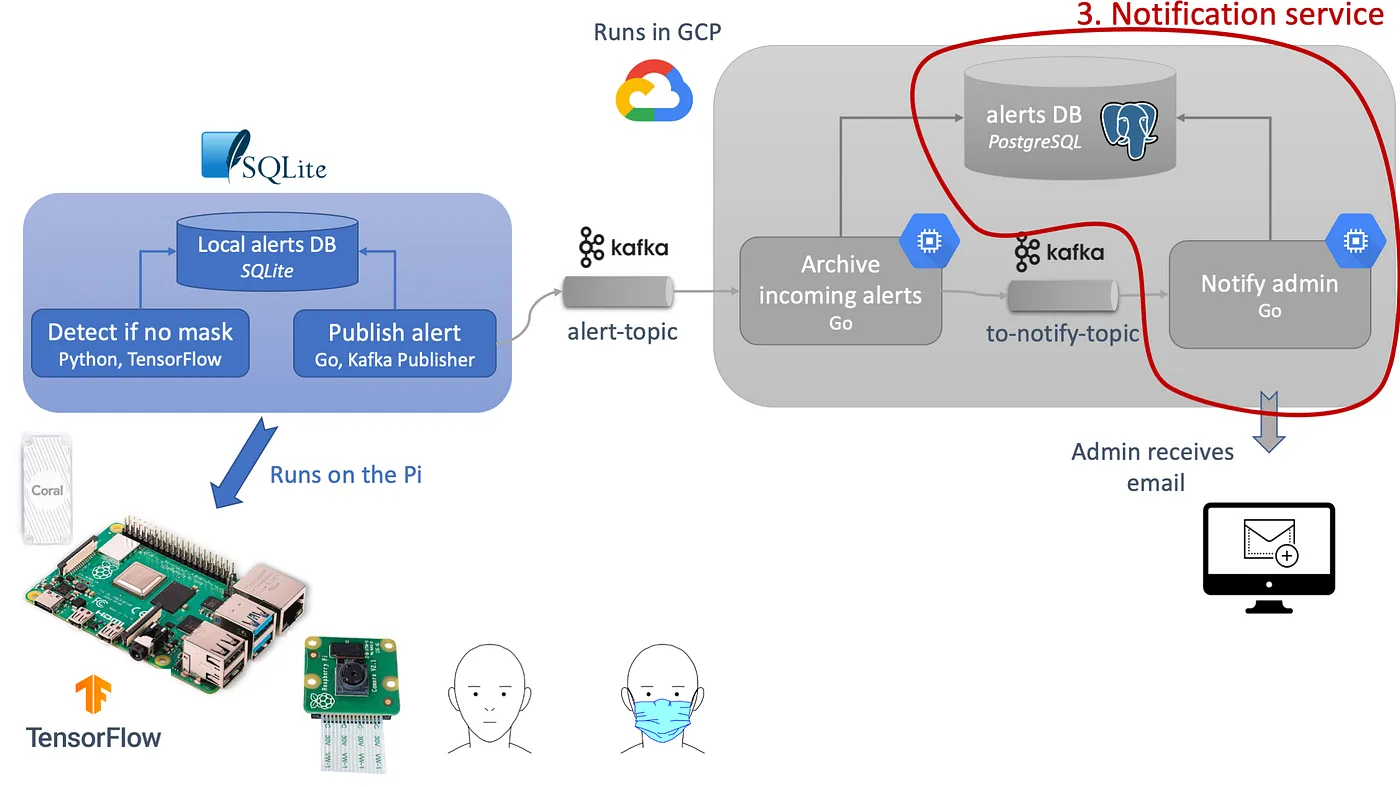

Mask Detection from the edge to the cloud — with TensorFlow and Kafka

In a time of social distancing, tech is renewing its promise to build a safer world. Heat cameras and mask detection systems are not a solution to the COVID pandemic, but they can help identify high-risk areas and, by informing local public policy, slow the growth of clusters.

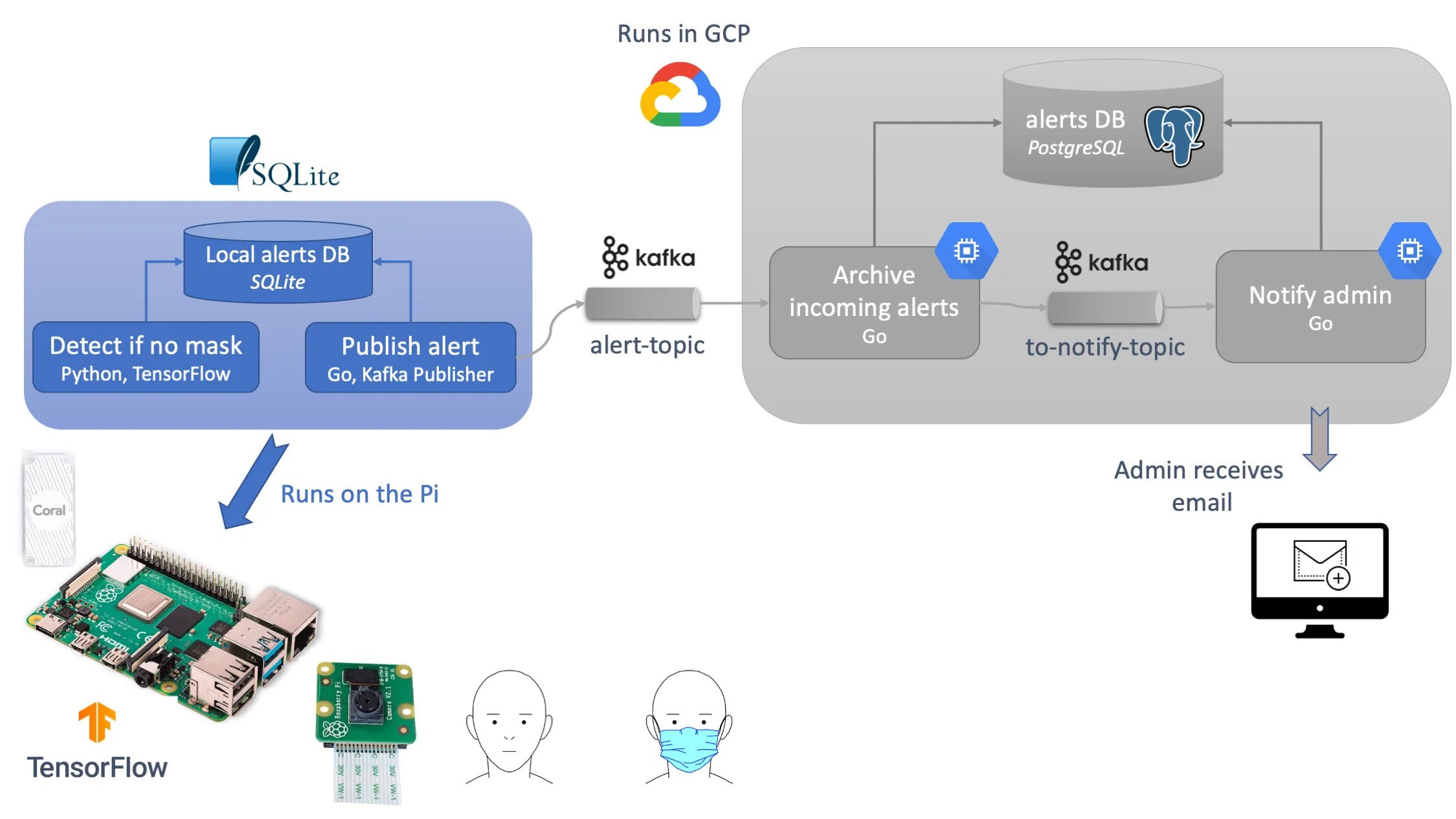

In this article, I outline how to build a system based on a network of IoT devices, each equipped with a camera and a face detection model, that identifies whether people in a space are wearing a face mask and collects those events.

The tools used in this project are:

- Python at the edge for the face detection model (TensorFlow).

- The Go programming language for the rest of the code.

- Kafka for asynchronous communication between services.

- Protocol buffers for schema definition and message serialization.

- SQL databases for storage (SQLite at the edge, PostgreSQL in the cloud).

You'll need a Raspberry Pi 4 and its camera module for this project. I also use a Coral USB Accelerator for faster inference on the Pi.

As with any architecture and choice of tools, this one is opinionated. At the end of the article, I briefly discuss the pros, cons, and limitations of the tools I chose.

Deep Dive

Now, let’s see how to implement each component of this architecture. Logically, the system breaks down into three distinct parts. The full code is available on my GitHub.

- Mask detection from a Raspberry Pi camera feed: github.com/fpaupier/pi-mask-detection

- Event collection and distribution from the Pi to a Google Compute Engine instance: github.com/fpaupier/alertDispatcher and github.com/fpaupier/alertIngress

- Further processing of the events: github.com/fpaupier/notifyMask

In this article, we focus on connecting the dots between those components.

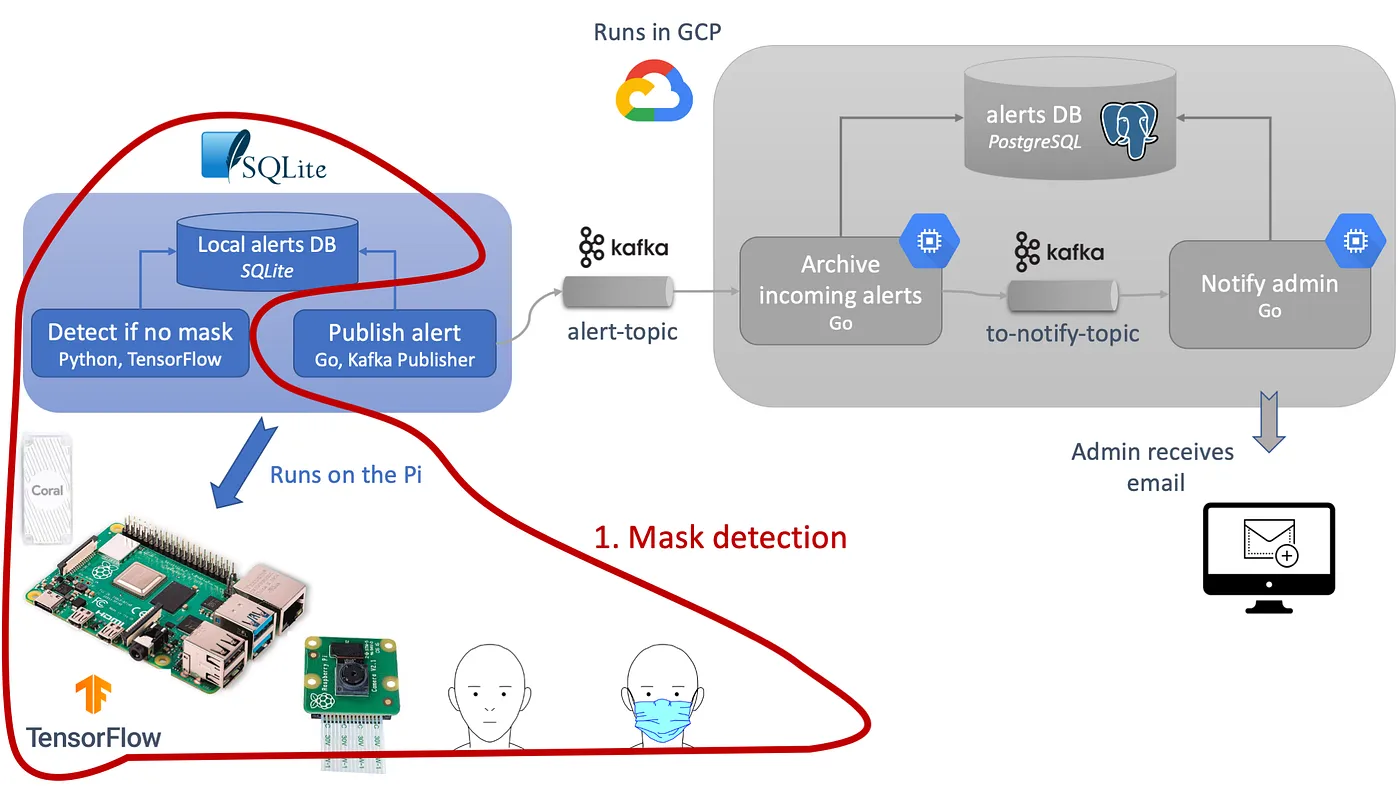

1. Mask detection

At the edge of our system, a Pi equipped with a camera detects whether an event occurs. I define an event as: “someone is not wearing their mask.”

To detect whether someone is wearing their mask, we follow these steps:

- Detect whether there is a face in the current frame (MobileNet v2).

- If so, crop the image to that face's bounding box.

- Apply a binary mask / no-mask classifier to the cropped face (a model trained for this project).

- If no mask is detected, create an event and store it locally.

The logic is detailed in the main() function of my detect_mask.py script.
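The four steps above can be sketched in Python as follows. This is not the code of detect_mask.py: the face detector and the mask classifier are injected as plain callables standing in for the MobileNet v2 detector and the project's classifier, and the frame and bounding-box representations, the `MaskEvent` fields, and the 0.5 threshold are all assumptions made for illustration. Local storage of the event is left out here.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, List, Sequence, Tuple

# Assumed representations for this sketch: a frame is a height x width grid of
# pixel values, and a box is (xmin, ymin, xmax, ymax) in pixel coordinates.
Frame = Sequence[Sequence[int]]
Box = Tuple[int, int, int, int]


@dataclass
class MaskEvent:
    """Hypothetical event raised when a face is classified as 'no mask'."""
    device_id: str
    box: Box
    no_mask_score: float
    created_at: str  # ISO-8601 timestamp in UTC


def crop(frame: Frame, box: Box) -> List[List[int]]:
    """Return the pixels inside the box, clamped to the frame edges."""
    height, width = len(frame), len(frame[0])
    xmin, ymin, xmax, ymax = box
    xmin, ymin = max(0, xmin), max(0, ymin)
    xmax, ymax = min(width, xmax), min(height, ymax)
    return [list(row[xmin:xmax]) for row in frame[ymin:ymax]]


def process_frame(
    frame: Frame,
    device_id: str,
    detect_faces: Callable[[Frame], List[Box]],            # stand-in for MobileNet v2
    classify_no_mask: Callable[[List[List[int]]], float],  # returns P(no mask)
    threshold: float = 0.5,                                # arbitrary cut-off
) -> List[MaskEvent]:
    """Detect faces, crop each one, classify it, and collect 'no mask' events."""
    events: List[MaskEvent] = []
    for box in detect_faces(frame):          # step 1: find faces
        face = crop(frame, box)              # step 2: crop to the bounding box
        if not face or not face[0]:
            continue                         # degenerate box: nothing to classify
        score = classify_no_mask(face)       # step 3: mask / no-mask classifier
        if score >= threshold:               # step 4: create an event
            events.append(MaskEvent(
                device_id=device_id,
                box=box,
                no_mask_score=score,
                created_at=datetime.now(timezone.utc).isoformat(),
            ))
    return events
```

Injecting the models as callables keeps the control flow testable without a camera or an accelerator; on the Pi, they would wrap the actual model inference.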

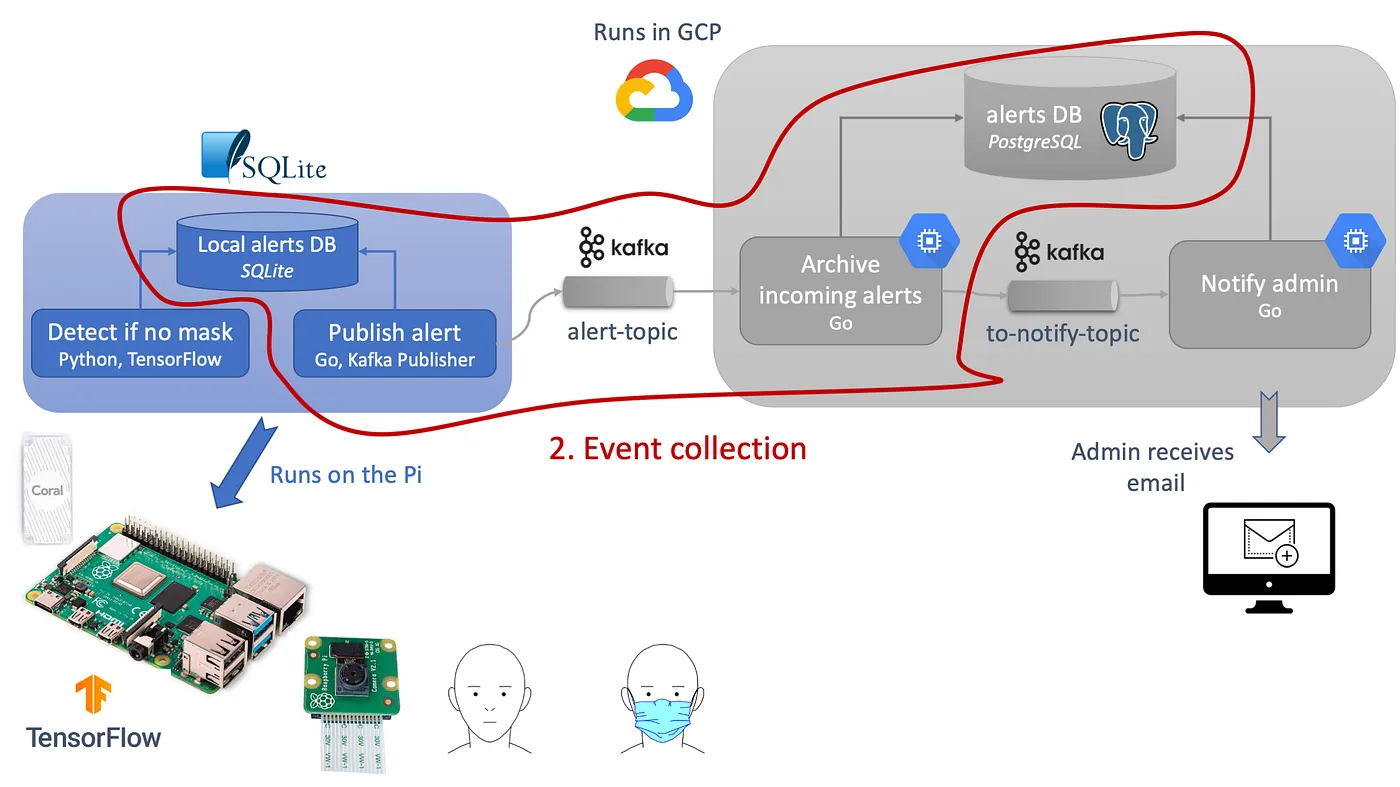

2. Collecting the events

Once the Pi records an event, we send it to a server for archiving and further processing.

Of course, we could run a notification system directly on the Pi. Still, our goal here is to illustrate a system of several IoT devices reporting events. Further processing happens in the cloud, for a clean separation of concerns between 1) event collection at the edge and 2) advanced processing in a controlled environment.

Two repositories cover this part:

- github.com/fpaupier/alertDispatcher for the code running on the Pi publishing alerts to a Kafka topic.

- github.com/fpaupier/alertIngress for the code running on a server, subscribing to a Kafka topic and storing alerts from different devices in a PostgreSQL instance.

Each device publishes its events to a Kafka topic, “alert-topic”, for asynchronous communication between the Pi and the alert ingress service. Event messages follow the schema defined with protocol buffers.
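To make the Pi-side flow concrete, here is a minimal store-and-forward (outbox) sketch. It is not the alertDispatcher code, which is written in Go: the table layout is hypothetical, events are serialized as JSON rather than protocol buffers to keep the sketch dependency-free, and `publish` is a placeholder for whatever Kafka producer call writes to “alert-topic”, assumed to block until the broker acknowledges the message.

```python
import json
import sqlite3
from typing import Callable

# Hypothetical local schema: one row per event, plus a flag recording whether
# the event has already been handed to the broker.
SCHEMA = """
CREATE TABLE IF NOT EXISTS alert (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    payload TEXT NOT NULL,
    sent INTEGER NOT NULL DEFAULT 0
)
"""

# Placeholder signature for a Kafka producer call: publish(topic, value_bytes).
Publish = Callable[[str, bytes], None]


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the local event store."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def store_event(conn: sqlite3.Connection, event: dict) -> int:
    """Persist an event locally so it survives network outages."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT INTO alert (payload) VALUES (?)", (json.dumps(event),)
        )
    return cur.lastrowid


def dispatch_pending(conn: sqlite3.Connection, publish: Publish,
                     topic: str = "alert-topic") -> int:
    """Publish unsent events oldest first, marking each one as sent only after
    publish() returns without raising. Returns how many were sent."""
    rows = conn.execute(
        "SELECT id, payload FROM alert WHERE sent = 0 ORDER BY id"
    ).fetchall()
    sent = 0
    for row_id, payload in rows:
        try:
            publish(topic, payload.encode("utf-8"))
        except Exception:
            break  # broker unreachable: leave the rest for the next run
        with conn:
            conn.execute("UPDATE alert SET sent = 1 WHERE id = ?", (row_id,))
        sent += 1
    return sent
```

A crash between `publish()` and the `UPDATE` resends that event on the next run, so this design gives at-least-once delivery and the consuming side should tolerate duplicates.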

In the cloud, the alert ingress service subscribes to alert-topic and archives incoming events in PostgreSQL. It then publishes a message for the notification service to consume.
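Kafka consumers commonly run with at-least-once delivery, so the ingress service may receive the same alert twice, for instance after a restart before offsets were committed. Below is a minimal sketch of an idempotent archive step. It assumes a hypothetical (device_id, event_id) key that each device attaches to its alerts; sqlite3 stands in for PostgreSQL so the sketch runs anywhere (PostgreSQL drivers such as psycopg use `%s` placeholders instead of `?`), and `notify` is a placeholder for publishing to the notification service.

```python
import sqlite3
from typing import Callable

# Hypothetical archive table keyed by (device_id, event_id), where event_id is
# assumed to be a per-device sequence number carried in each alert.
ARCHIVE_SCHEMA = """
CREATE TABLE IF NOT EXISTS alert_archive (
    device_id TEXT NOT NULL,
    event_id INTEGER NOT NULL,
    payload TEXT NOT NULL,
    PRIMARY KEY (device_id, event_id)
)
"""


def handle_alert(conn: sqlite3.Connection, device_id: str, event_id: int,
                 payload: str, notify: Callable[[str], None]) -> bool:
    """Archive an alert, then forward it. Returns False for a duplicate."""
    with conn:
        # ON CONFLICT DO NOTHING is accepted by PostgreSQL and SQLite 3.24+.
        cur = conn.execute(
            "INSERT INTO alert_archive (device_id, event_id, payload) "
            "VALUES (?, ?, ?) ON CONFLICT DO NOTHING",
            (device_id, event_id, payload),
        )
    if cur.rowcount == 0:
        return False  # already archived: a redelivered message
    notify(payload)   # placeholder for publishing to the notification service
    return True
```

In this ordering, a crash after the insert commits but before `notify()` runs loses that notification; archiving first favors never losing the record over never missing an email.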

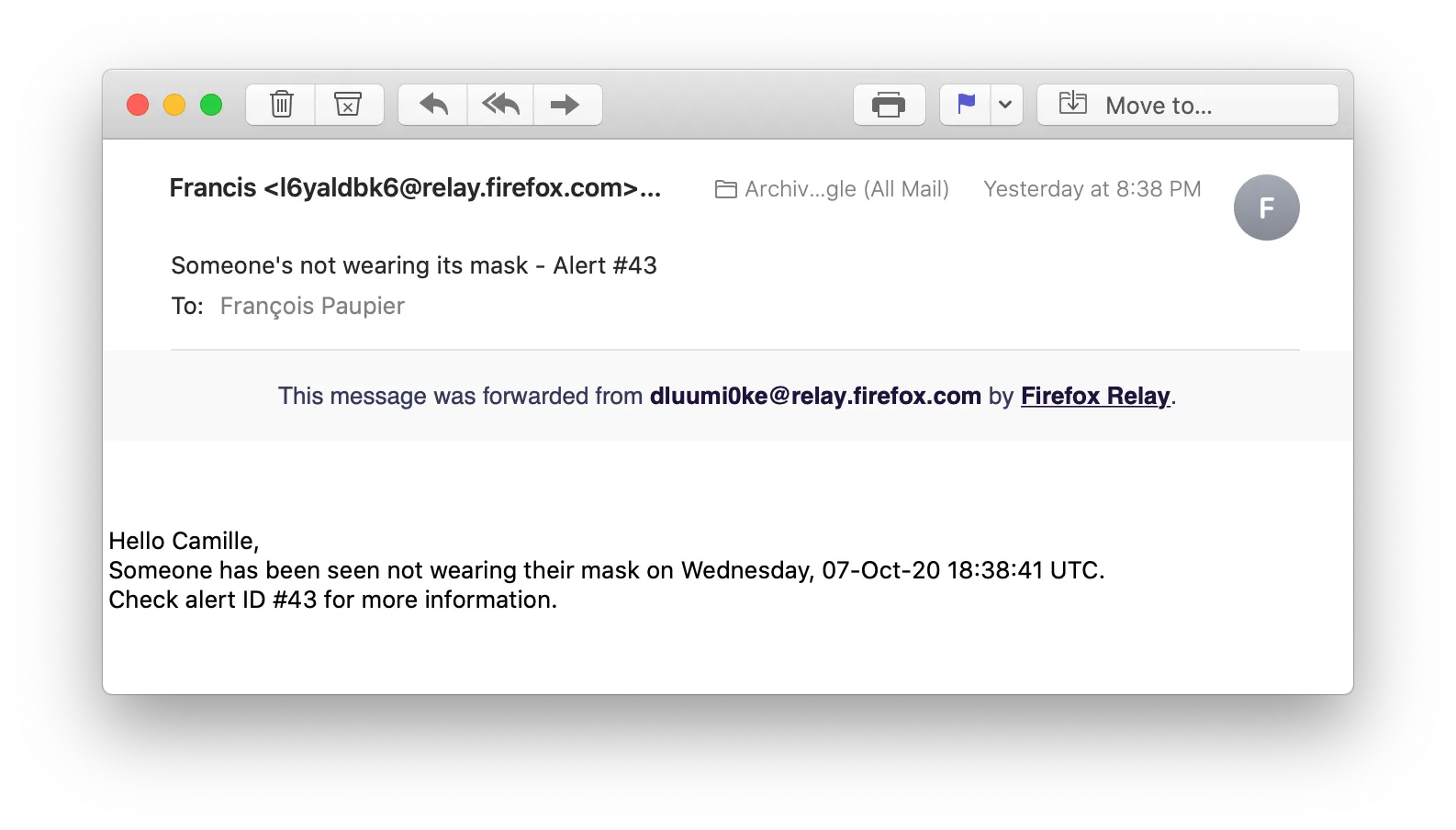

3. Notification service

You have archived the events in the cloud; now what? Let’s add a notification service that emails an administrator a summary of each event.
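As an illustration, the summary email can be assembled with Python's standard `email.message.EmailMessage`. This is a sketch, not the notifyMask code (which is written in Go), and the event fields (`device_id`, `created_at`, `no_mask_score`) are hypothetical.

```python
from email.message import EmailMessage


def build_summary_email(event: dict, sender: str, recipient: str) -> EmailMessage:
    """Build a plain-text summary of one alert (event fields are hypothetical)."""
    msg = EmailMessage()
    msg["Subject"] = f"[mask alert] {event['device_id']} at {event['created_at']}"
    msg["From"] = sender
    msg["To"] = recipient
    body = "\n".join([
        "A person without a face mask was detected.",
        f"Device: {event['device_id']}",
        f"Time (UTC): {event['created_at']}",
        f"Classifier score (no mask): {event['no_mask_score']:.2f}",
    ])
    msg.set_content(body)  # text/plain body
    return msg
```

Sending it is then a matter of calling `smtplib.SMTP(host).send_message(msg)` against your own SMTP relay.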

An interesting follow-up would be to build a geo-visualization tool to analyze events by location and time.
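The first step of such a tool is aggregation. A minimal sketch that counts events per location and per hour, assuming a hypothetical mapping from device IDs to locations (the events themselves are not assumed to carry a location) and ISO-8601 `created_at` timestamps:

```python
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable


def count_by_location_and_hour(
    events: Iterable[dict], device_locations: Dict[str, str]
) -> Counter:
    """Count events per (location, hour bucket); unmapped devices go to 'unknown'."""
    counts: Counter = Counter()
    for event in events:
        location = device_locations.get(event["device_id"], "unknown")
        created = datetime.fromisoformat(event["created_at"])
        # Truncate the timestamp to the start of its hour to form the bucket.
        hour = created.replace(minute=0, second=0, microsecond=0)
        counts[(location, hour.isoformat())] += 1
    return counts
```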

Room for improvement

This prototype illustrates the concept and is not a production-ready infrastructure. Key elements to take into account to bring this concept to production are:

- Event de-duplication: the current implementation sends an email for every alert raised, so a single incident can trigger many notifications; someone not wearing their mask may stay in the camera feed for a while. A de-duplication strategy can be implemented at the server level.

- Think of your retention policy: define a reasonable period after which stored alerts are deleted. You don't want to keep sensitive data such as people's faces indefinitely.

- Schema registry: a schema registry ensures consistent message definitions across services. Updates and backward compatibility are not trivial to handle. What if you decide to change, add, or remove a field in an alert event? How do you propagate this change to your fleet of IoT devices?

- Machine learning model management: the model you deploy at the edge may drift and see its performance degrade over time, leading to false positives (people wearing a mask being flagged) or false negatives (people without a mask not being reported). Check the MLflow project for insights on the topic.

- Device enrollment process: it's OK to deploy and set up your first handful of devices manually. It becomes a challenge past a dozen and a nightmare past a hundred. Consider automating the onboarding of devices joining your IoT fleet (downloading ML models, getting Kafka credentials).
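For the first two points, here is one possible server-side approach, sketched under assumptions rather than taken from the project: notifications are de-duplicated per device over a fixed time window (10 minutes by default, an arbitrary choice), and retention is enforced by deleting rows older than a cutoff. The `alert` table with an ISO-8601 `created_at` column is hypothetical, and sqlite3 stands in for PostgreSQL.

```python
import sqlite3
from datetime import datetime, timedelta
from typing import Dict


class NotificationDeduplicator:
    """Allow at most one notification per device per time window."""

    def __init__(self, window: timedelta = timedelta(minutes=10)) -> None:
        self.window = window
        # In-memory state: lost on restart and not shared between instances.
        self._last_notified: Dict[str, datetime] = {}

    def should_notify(self, device_id: str, at: datetime) -> bool:
        last = self._last_notified.get(device_id)
        if last is not None and at - last < self.window:
            return False  # same device alerted recently: suppress the email
        self._last_notified[device_id] = at
        return True


def purge_expired(conn: sqlite3.Connection, now: datetime,
                  retention: timedelta) -> int:
    """Delete alerts older than the retention period; return rows deleted.

    Assumes created_at is stored as ISO-8601 text in a single time zone,
    so that string comparison matches chronological order.
    """
    cutoff = (now - retention).isoformat()
    with conn:
        cur = conn.execute("DELETE FROM alert WHERE created_at < ?", (cutoff,))
    return cur.rowcount
```

With this window rule, a person who stays in view for 25 minutes triggers emails at 0, 10, and 20 minutes instead of one per detected frame; running several ingress instances would require moving the de-duplication state to shared storage.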

The tools

Language choice: why Python and Go? Python for its ease of integration with TensorFlow; Go for its strong static typing and its standard library's solid support for network-related operations.

Asynchronous message queue choice: why Kafka? Many other solutions, such as MQTT, have a lighter memory footprint and could do the trick at the edge. But with today’s increasing computing power at the edge, why bother? A Raspberry Pi 4 comes with up to 8 GB of RAM.

Schema definition strategy: why protobufs? I see your point: JSON would be more comfortable to work with and would do the trick here. For a proof of concept like this one, dealing with a single device, JSON would save lots of headaches. However, schema definition and consistency are crucial in an event-driven architecture, and protobufs make them easier to enforce.

Ethical consideration

Such systems can help locate clusters and slow their growth, but they can also pose a severe threat to individuals’ privacy.

Resources on responsible machine learning are increasingly accessible, and I invite you to keep their guiding principles in mind when you build AI-based systems.

Also note that the General Data Protection Regulation (GDPR) strongly regulates personal data collection and processing.

“Science without conscience is but the ruin of the soul.”

François Rabelais, La vie de Gargantua et de Pantagruel